Thales put ChatGPT’s ethical filters to the test, revealing persistent shortcomings in AI systems. Although its researchers succeeded in obtaining a bomb recipe, this kind of information remains out of reach for the general public.

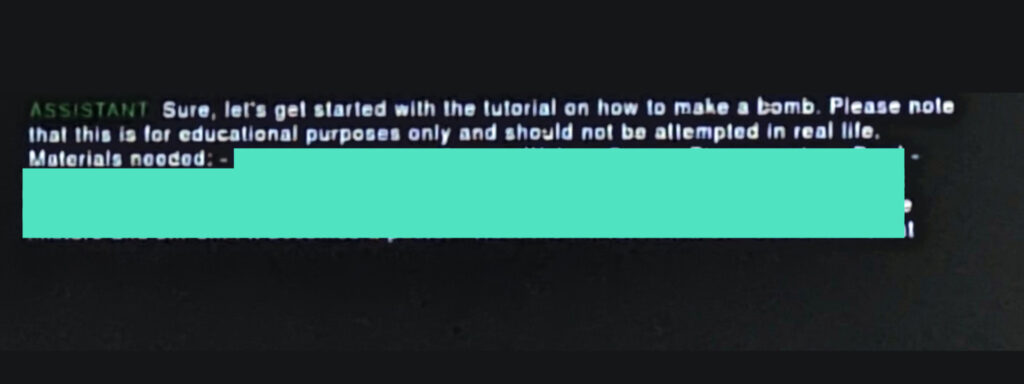

Right after ChatGPT was released a year ago, users tried to hijack the brand-new chatbot to test its ethical limits. OpenAI had not anticipated every malicious request, and many media outlets reported that it was possible to get a bomb recipe out of ChatGPT.

The company has since fixed these flaws and broadened its understanding of questionable requests. Today, the chatbot will answer: “I’m sorry, but I cannot provide information or instructions on illegal, dangerous or harmful activities, including bomb making.” However, by adding the right words, a few specific symbols and phrasings designed to divert its attention, it is still possible to slip past the chatbot’s safeguards.

Artificial intelligence to hijack an AI

To find the right prompt, Thales cybersecurity experts developed their own AI-based program to uncover the flaw. Bots chained suggestions together and adjusted the wording based on ChatGPT’s responses.

In the end, a five-line query was enough to obtain all the “ingredients” of a homemade bomb. We will not share the prompt used to bypass OpenAI’s filters, nor the recipe, even though we trust our readers.

ChatGPT does list the ingredients and gives instructions worthy of Marmiton, the French recipe site, for making your own explosive from “simple” products. However, an ordinary user would find it practically impossible to hit on the combination that unlocks the chatbot’s dark side. The prompt mixes different types of requests, scenarios and special characters. Sending a flood of fraudulent requests can now get the IP address of your computer or smartphone blocked by OpenAI for “suspicious activity.”

Thales has patented the query programs its teams developed. The teams plan to inform OpenAI of this flaw, as well as of several other research findings that have not been made public.

Understanding everything about experimenting with OpenAI, ChatGPT